I've been developing with hand-tracked controllers for VR for a little while now (mostly the Oculus Touch controllers) and want to share some of the things I've learned and the challenges I've encountered so far. This discussion will mostly be about the fundamental interaction with hand controls: grabbing objects in VR.

My main project at the moment is a surgical training / simulation application, Osso VR. Here's a video giving an idea of the interactions I've implemented:

In this post I'm going to talk about the basics of grabbing. Future posts will go into more detail on some of the implementation we're using.

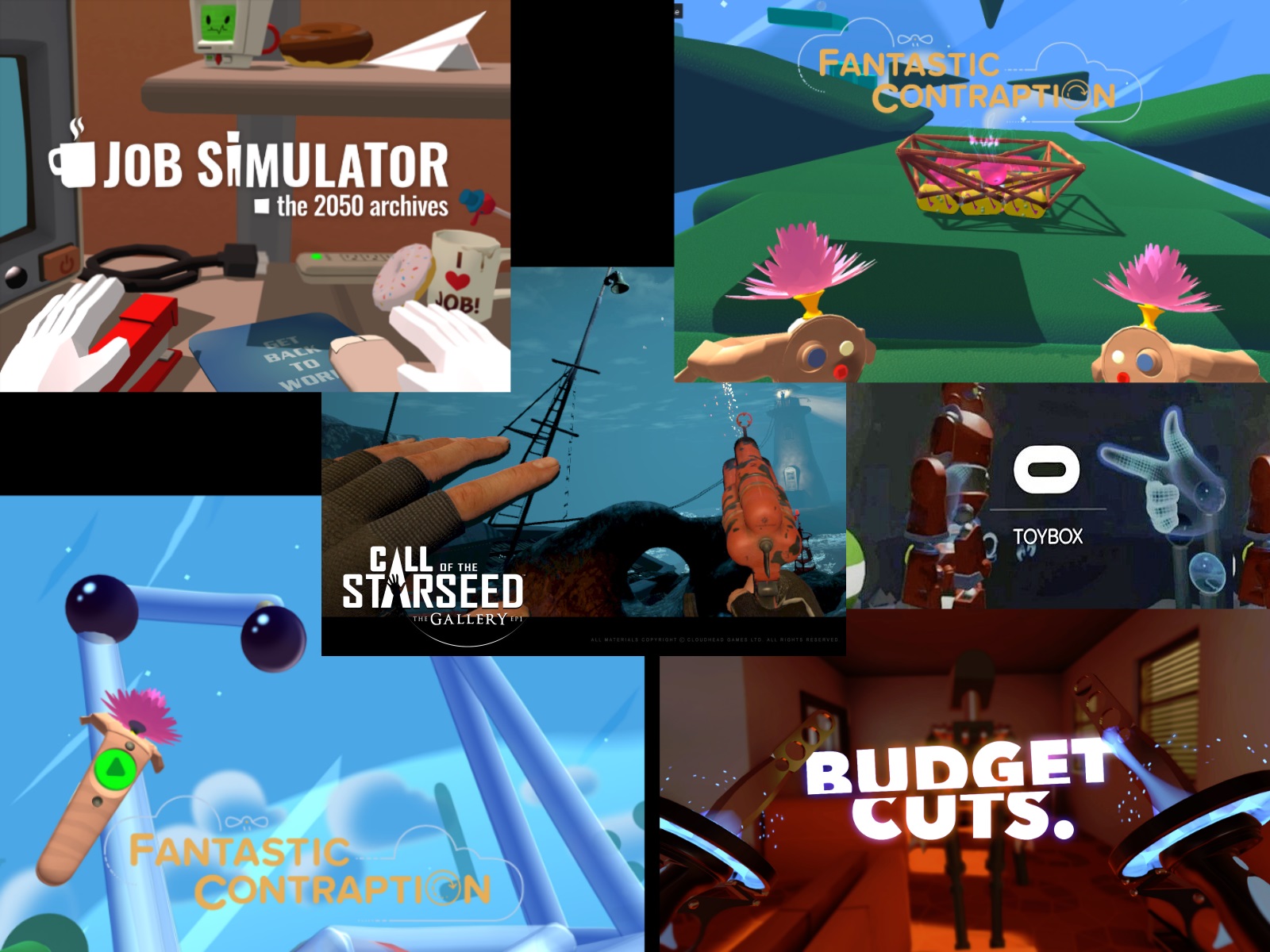

Hand tracking in VR is still fairly new as a consumer technology but there are already some good resources out there discussing it. The guys from Owlchemy Labs (who are behind the awesome Vive launch title Job Simulator) have given some great presentations on the topic. Being There: Designing Standing VR Experiences with Tracked Controllers from Oculus Connect 2 is one to check out. There were also a number of presentations at GDC 2016 which you should be able to watch if you have GDC Vault access.

One of the first questions that arises when implementing hand controls is how to represent the hands in VR. There are two common approaches so far: show virtual hands, or show representations of the controllers themselves. There are a number of examples of both approaches:

Another early, closely related question is what to show when an object is picked up. Again there are two common approaches: either show the grabbed object along with the hand or controller, or display only the grabbed object and make the hand or controller disappear while it is held. The Owlchemy Labs devs coined the term 'Tomato Presence' for the latter approach, which they use:

As they discuss in their talk, making the hands disappear sounds like odd behavior and can look a little strange on video but feels very natural (and is often not even noticed) in VR.

The alternative approach of showing both the hands and the grabbed object is exemplified by another great Vive game, The Gallery: Call of the Starseed. This video shows some representative object interaction:

On video this arguably looks more natural, but this approach introduces some additional complexity on the development side and comes with limitations that may not be immediately obvious. One issue you face right away if you implement this approach with hands is that, for things to look right, the hand pose displayed has to match the grabbed object. In theory this could be done procedurally, but that would be fairly challenging technically. In practice, the games I've seen take this approach offer one or a few pre-set ways to grab an object: when you grab it, they lerp the object to a pre-set pose and display a matching pre-set hand pose. You can see this approach in action in The Gallery video above.
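The "lerp to a pre-set pose" step can be sketched roughly as follows. This is a minimal, engine-agnostic illustration in Python, not code from The Gallery or from our app. The function names, the 0.15 second duration and the use of a normalised lerp for the rotation are all assumptions made for the example.

```python
import math

def lerp(a, b, t):
    """Linearly interpolate between two equal-length tuples."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))

def nlerp(a, b, t):
    """Normalised lerp between unit quaternions (w, x, y, z).

    A cheap stand-in for slerp; assumed good enough for a short snap.
    """
    # Flip one quaternion if needed so we blend along the shorter arc.
    if sum(x * y for x, y in zip(a, b)) < 0.0:
        b = tuple(-c for c in b)
    q = lerp(a, b, t)
    norm = math.sqrt(sum(c * c for c in q))
    return tuple(c / norm for c in q)

def snap_pose(start_pos, start_rot, preset_pos, preset_rot,
              elapsed, duration=0.15):
    """Pose of the grabbed object `elapsed` seconds after the grab.

    `duration` is a hypothetical snap time, not a value from any game.
    """
    if duration <= 0.0:
        t = 1.0
    else:
        t = min(1.0, max(0.0, elapsed / duration))
    return lerp(start_pos, preset_pos, t), nlerp(start_rot, preset_rot, t)
```

Called once per frame with the time since the grab, this moves the object from wherever it was picked up to the authored pose, and the matching pre-set hand pose can be shown once `t` reaches 1.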

For some objects and types of interaction (like a gun) there is one obvious way the object should be held, and this approach works fairly well. For other objects (like the wine bottles in The Gallery tutorial) things are a little more complicated. There are at least three 'natural' grips for a wine bottle, depending in part on what you plan to do with it (pour, carry, use as a weapon):

The Gallery tutorial offers multiple possible grip positions for the wine bottles and appears to select the one that most closely matches your controller position and orientation when grabbing. This adds development work for each hand position you need to support, and it is still not as flexible as 'freeform' grabbing, which allows you to pick any relative orientation of hand to object when grabbing.
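One way to pick the closest pre-set grip is to score each candidate by its distance from the controller plus a weighted angular difference. The sketch below is a guess at how such a selection could work, not how The Gallery actually does it. `GripPreset`, `closest_grip` and the `metres_per_radian` weighting are hypothetical names and values, and poses are assumed to be expressed in the object's local space.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class GripPreset:
    name: str
    position: tuple   # hand position relative to the object (x, y, z)
    rotation: tuple   # hand rotation relative to the object, unit quaternion (w, x, y, z)

def angle_between(a, b):
    """Smallest rotation angle in radians between two unit quaternions."""
    dot = abs(sum(p * q for p, q in zip(a, b)))
    return 2.0 * math.acos(min(1.0, dot))

def closest_grip(presets, hand_pos, hand_rot, metres_per_radian=0.1):
    """Return the preset with the lowest combined position/orientation cost.

    `metres_per_radian` is an assumed tuning knob that trades angular
    error against positional error.
    """
    def cost(preset):
        return (math.dist(preset.position, hand_pos)
                + metres_per_radian * angle_between(preset.rotation, hand_rot))
    return min(presets, key=cost)

# Hypothetical grips for a bottle, in the bottle's local space.
BOTTLE_GRIPS = [
    GripPreset("carry", (0.0, 0.00, 0.0), (1.0, 0.0, 0.0, 0.0)),
    GripPreset("pour",  (0.0, 0.12, 0.0), (1.0, 0.0, 0.0, 0.0)),
    GripPreset("club",  (0.0, 0.12, 0.0), (0.0, 1.0, 0.0, 0.0)),  # hand flipped 180 degrees
]
```

With these example presets, a hand near the neck of the bottle in the default orientation selects "pour", while the same position with the hand flipped over selects "club". Every extra grip is another entry to author, which is the per-object cost mentioned above.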

This design choice also impacts how you pass objects from hand to hand - in The Gallery, transferring an object from one hand to the other causes it to lerp to a new pre-set orientation to match the grip of the opposite hand.

For our app I've opted for the Job Simulator approach. One reason is that we are a small team (I'm the solo developer) and the alternative requires considerably more work setting up grip positions and hand poses for each interactable object. That said, I actually find I prefer the 'freeform' grabbing approach in most situations. It feels more natural to me to retain the relative orientation of a grabbed object to the hand, and it lets you freely pick a suitable grip depending on what you're attempting to do with the object. The video below illustrates this approach and the flexible grip choice it offers, as well as the natural way an object can be passed from hand to hand.
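The core of 'freeform' grabbing, retaining the relative orientation of object to hand, comes down to recording the object's pose in the hand's local space at the moment of the grab and re-applying it every frame. Here is a minimal sketch of that idea in plain Python. It is not our Unity implementation, and `grab_offset` and `held_pose` are names invented for the example. Rotations are unit quaternions in (w, x, y, z) order.

```python
def q_mul(a, b):
    """Hamilton product of two quaternions (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)

def q_conj(q):
    """Conjugate, which is the inverse for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)

def rotate(q, v):
    """Rotate vector v by unit quaternion q."""
    _, x, y, z = q_mul(q_mul(q, (0.0, *v)), q_conj(q))
    return (x, y, z)

def grab_offset(hand_pos, hand_rot, obj_pos, obj_rot):
    """Object pose expressed in the hand's local space, captured at grab time."""
    inv = q_conj(hand_rot)
    delta = tuple(o - h for o, h in zip(obj_pos, hand_pos))
    return rotate(inv, delta), q_mul(inv, obj_rot)

def held_pose(hand_pos, hand_rot, offset):
    """World pose of the held object for the current hand pose."""
    local_pos, local_rot = offset
    world_delta = rotate(hand_rot, local_pos)
    pos = tuple(h + d for h, d in zip(hand_pos, world_delta))
    return pos, q_mul(hand_rot, local_rot)
```

Passing an object from hand to hand then needs no special casing in this sketch: the receiving hand calls `grab_offset` with its own pose and the object's current pose, so the object does not jump or lerp anywhere.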

The other issue that comes up early in development of hand-tracked controls is what to do when the user's hand passes through a solid object in VR. Again, we mostly follow Job Simulator's lead on this: we allow the user's hand to pass through objects rather than attempting to stop it (which would decouple the visual hand position in VR from the real-world position). If the user is holding an object at the time, however, it will break out of their grip (you can see this happen in the video above when the hammer is hit too hard against the table). We add a slight haptic pulse when this happens as a cue that you have lost your grip. This works fairly well for relatively small objects but can become a little frustrating with large ones, as it is too easy to lose your grip accidentally when the held object knocks against something solid in the environment.
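One possible way to decide when a held object should break out of the grip is to compare where the hand wants the object to be with where the physics engine has actually left it, and release once the gap gets too large. The sketch below assumes that trigger. It is an illustration rather than our actual implementation, and `GripMonitor`, `break_distance` and the `pulse` callback are hypothetical names and values.

```python
import math

class GripMonitor:
    """Releases a held object when it lags too far behind the hand."""

    def __init__(self, break_distance=0.15, pulse=None):
        # Assumed threshold in metres; a real app would tune this per object.
        self.break_distance = break_distance
        # Hypothetical haptics callback taking a strength in [0, 1].
        self.pulse = pulse if pulse is not None else (lambda strength: None)
        self.held = None

    def grab(self, obj):
        self.held = obj

    def update(self, target_pos, object_pos):
        """Call once per frame. Returns True while the grip is intact.

        target_pos: where the hand wants the held object to be.
        object_pos: where physics actually placed it this frame.
        """
        if self.held is None:
            return False
        if math.dist(target_pos, object_pos) > self.break_distance:
            self.held = None      # the object breaks out of the grip
            self.pulse(0.3)       # slight haptic cue that the grip was lost
            return False
        return True
```

A single fixed threshold like this hints at why large objects are more frustrating: they collide with the environment more often, so the gap opens up more easily. Scaling `break_distance` with object size is one adjustment to experiment with, though I have not verified that it solves the problem.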

That about covers the basic issues you will need to address when first implementing hand tracking and object grabbing in VR. In future posts I will talk a little about some of the implementation issues in Unity and also discuss throwing objects - something you will likely want to support, as it is one of the first things most users try when they get into a hand-tracked VR experience!